Kappa Calculator

Calculates a Kappa statistic from two columns.

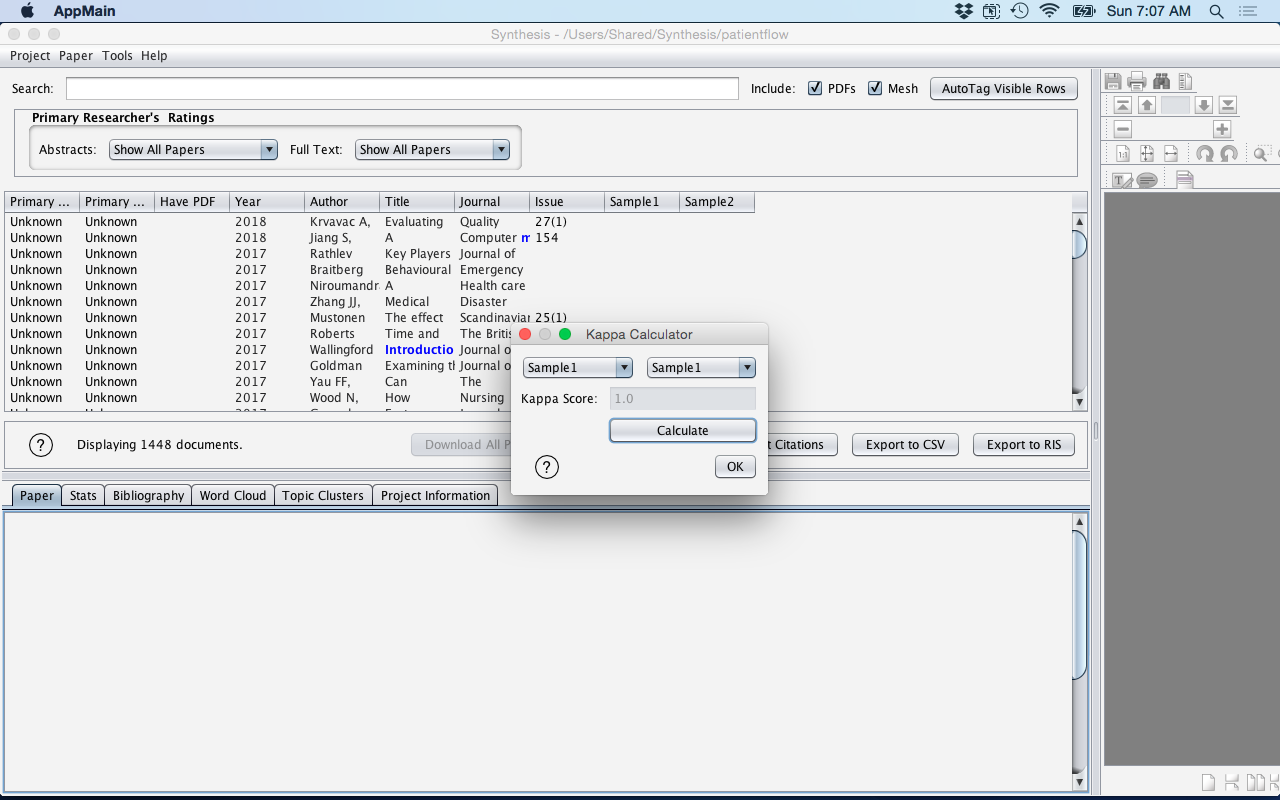

Synthesis Main Window Menu: Tools > Kappa Calculator

A Kappa statistic measures inter-rater agreement: how much two raters agree beyond the agreement that would be expected by chance alone.
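For two raters, Cohen's kappa compares observed agreement with chance agreement using the standard formula below. The symbols are introduced here for illustration: p_o is the proportion of rows on which the raters agree, and p_{1,Yes} (or p_{2,No}, and so on) is the proportion of rows that rater 1 (or rater 2) marks with that value. A score of 1 means perfect agreement, 0 means agreement no better than chance, and negative scores mean less agreement than chance.

```latex
\kappa = \frac{p_o - p_e}{1 - p_e}, \qquad
p_e = p_{1,\mathrm{Yes}}\, p_{2,\mathrm{Yes}} + p_{1,\mathrm{No}}\, p_{2,\mathrm{No}}

% Worked example: the raters agree on 6 of 8 rows, and each marks 4 of the 8 rows 'Yes'
p_o = \tfrac{6}{8} = 0.75, \quad
p_e = 0.5 \cdot 0.5 + 0.5 \cdot 0.5 = 0.5, \quad
\kappa = \frac{0.75 - 0.5}{1 - 0.5} = 0.5
```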

The Kappa Calculator calculates Cohen's Kappa score between two user-defined custom columns. Each column must contain the keyword 'Yes' for a 'Yes' value; a blank cell represents 'No'.
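The calculation for two 'Yes'/blank columns can be sketched in Python as follows. This is a minimal illustration of Cohen's kappa, not the calculator's actual implementation. The function name `cohens_kappa` is hypothetical, each column is assumed to be a list of cell values, and a cell is assumed to count as 'Yes' only when it equals `Yes` after trimming whitespace (anything else, including an empty string or `None`, is read as 'No').

```python
def cohens_kappa(column1, column2):
    """Cohen's kappa for two equal-length columns of 'Yes'/blank cells.

    Assumption: a cell is 'Yes' only if it equals "Yes" after trimming
    whitespace; anything else (empty string, None) is treated as 'No'.
    """
    if len(column1) != len(column2):
        raise ValueError("columns must have the same number of rows")
    if not column1:
        raise ValueError("columns must not be empty")

    def is_yes(cell):
        return cell is not None and str(cell).strip() == "Yes"

    rater1 = [is_yes(cell) for cell in column1]
    rater2 = [is_yes(cell) for cell in column2]
    n = len(rater1)

    # Observed agreement: share of rows where both columns give the same answer.
    p_o = sum(a == b for a, b in zip(rater1, rater2)) / n

    # Chance agreement from each column's overall share of 'Yes' cells.
    yes1 = sum(rater1) / n
    yes2 = sum(rater2) / n
    p_e = yes1 * yes2 + (1 - yes1) * (1 - yes2)

    if p_e == 1:
        # Both columns are entirely 'Yes' or entirely blank: kappa is 0/0.
        raise ValueError("kappa is undefined when both columns hold a single identical value")
    return (p_o - p_e) / (1 - p_e)
```

Passing the same column twice returns 1.0 (perfect agreement), provided the column contains both 'Yes' and blank cells. If both columns are entirely 'Yes' or entirely blank, expected agreement is 1 and kappa is undefined, so this sketch raises an error rather than returning a number.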

To use the Kappa Calculator:

- Select Column1

- Select Column2

- Press the Calculate button

Figure: Kappa Calculator - Comparing the same column

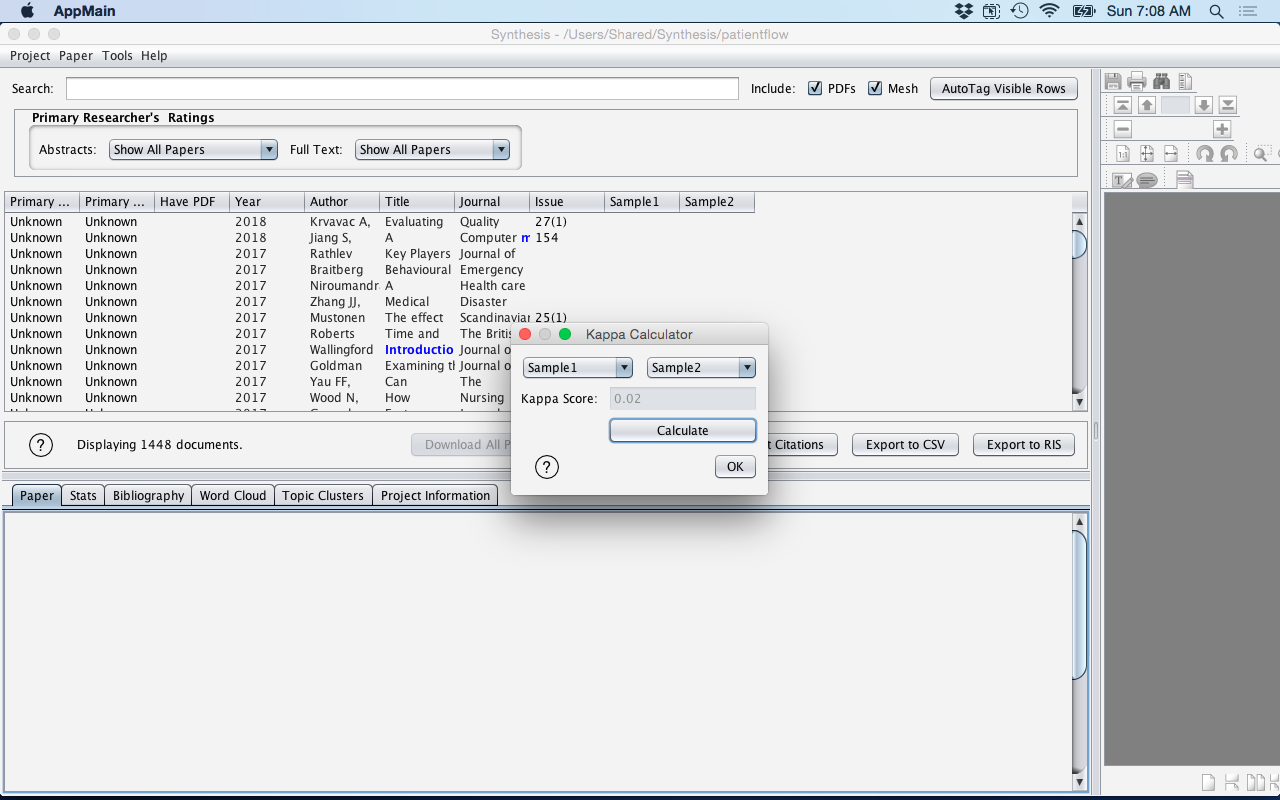

| Kappa Score | Strength of Agreement (Altman 1991) | Strength of Agreement (Viera 2005) |
|---|---|---|
| < 0 | Poor | Less than chance agreement |
| 0.01 - 0.20 | Poor | Slight agreement |
| 0.21 - 0.40 | Fair | Fair agreement |
| 0.41 - 0.60 | Moderate | Moderate agreement |
| 0.61 - 0.80 | Good | Substantial agreement |
| 0.81 - 1.00 | Very Good | Almost perfect agreement |
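The Viera (2005) column can be applied to a calculated score with a small lookup. This is an illustrative sketch only: the name `viera_strength` is hypothetical, and it assumes each band runs up to and including its upper bound, so scores in the gaps between the printed ranges (for example exactly 0, or 0.205) are placed in the band just above the gap. Negative scores are labeled as less than chance agreement, following Viera and Garrett (2005).

```python
# Viera & Garrett (2005) bands as (inclusive upper bound, label).
# Assumption: a score in a gap between printed ranges joins the band above it.
VIERA_BANDS = [
    (0.20, "Slight agreement"),
    (0.40, "Fair agreement"),
    (0.60, "Moderate agreement"),
    (0.80, "Substantial agreement"),
    (1.00, "Almost perfect agreement"),
]


def viera_strength(kappa: float) -> str:
    """Return the Viera (2005) strength-of-agreement label for a kappa score."""
    if not -1.0 <= kappa <= 1.0:
        raise ValueError("kappa must lie between -1 and 1")
    if kappa < 0:
        return "Less than chance agreement"
    for upper, label in VIERA_BANDS:
        if kappa <= upper:
            return label
    return VIERA_BANDS[-1][1]  # not reached: kappa <= 1.0 always matches the last band
```

Keeping the bands in a single list makes the boundaries easy to check against the table.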

Figure: Kappa Calculator - Comparing two different columns

References:

- McHugh ML. Interrater reliability: the kappa statistic. Biochem Med (Zagreb). 2012 Oct;22(3):276-282. Published online 2012 Oct 15. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3900052/

- Viera AJ, Garrett JM. Understanding interobserver agreement: the kappa statistic. Fam Med. 2005;37(5):360-363. http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.483.429&rep=rep1&type=pdf

- Altman DG. Practical Statistics for Medical Research. London: Chapman and Hall; 1991.